Developing high-performance software is a complex task that requires specialized expertise. GSO (Global Software Optimization) is a benchmark for evaluating how well language models can do it. We develop an automated pipeline that analyzes repository commit histories by generating and executing synthetic end-to-end performance tests.

We identify 102 challenging optimization tasks across 10 codebases, spanning diverse domains and programming languages. In each GSO task, an agent is given a codebase and a performance test that serves as a precise specification, and is tasked with improving runtime efficiency. Evaluation measures the correctness of the model-generated patch and its performance relative to the expert developer commit, which serves as the target.
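As a rough illustration of this evaluation, the sketch below scores a patch on both correctness and speedup relative to the expert commit. The function name `evaluate_patch`, the simple speedup ratio, and the 0.95 threshold are assumptions made for illustration, not the benchmark's published scoring rule.

```python
def evaluate_patch(base_time, patch_time, expert_time, outputs_match, threshold=0.95):
    """Return True if a patch is correct and close enough to the expert's speedup.

    All times are wall-clock seconds for the same performance test:
    base_time   -- original codebase
    patch_time  -- codebase with the model-generated patch
    expert_time -- codebase with the expert developer commit
    threshold   -- hypothetical fraction of the expert speedup that must be reached
    """
    if min(base_time, patch_time, expert_time) <= 0:
        raise ValueError("timings must be positive")
    # A patch that changes behavior fails regardless of how fast it is.
    if not outputs_match:
        return False
    patch_speedup = base_time / patch_time
    expert_speedup = base_time / expert_time
    return patch_speedup >= threshold * expert_speedup
```

For example, a patch that matches the expert's timing passes, while one that is only half as fast as the expert's version does not.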

Features

Long-Horizon Task

Beyond typical software engineering (SWE) tasks, GSO requires identifying bottlenecks and planning optimization strategies over a long horizon.

Construction Methodology

Our automated execution-based framework generates performance tests and candidate tasks, which are then manually validated to ensure diversity, complexity, and resistance to model behaviours like reward hacking.

Precise Specification

Unlike GitHub issues, performance tests serve as precise, automated specifications that unambiguously define optimization tasks for rigorous evaluation.
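A minimal sketch of what such a performance test might look like is shown below. The names `setup_workload`, `experiment`, and `run_performance_test` are hypothetical, and sorting a list stands in for the real library call under test. The pattern is to build the workload outside the timed region, check the result against a stored reference, and then time repeated runs.

```python
import timeit


def setup_workload(n=20_000):
    """Build the input once, outside the timed region (hypothetical workload)."""
    return list(range(n, 0, -1))


def experiment(data):
    """The operation being optimized; sorting is only a stand-in here."""
    return sorted(data)


def run_performance_test(func, data, reference=None, repeats=5):
    """Check correctness against a reference result, then time the function."""
    result = func(data)
    if reference is not None and result != reference:
        raise AssertionError("result differs from the reference output")
    # Take the minimum over several runs to reduce timing noise.
    timings = timeit.repeat(lambda: func(data), number=1, repeat=repeats)
    return min(timings), result
```

Because the test both pins down the expected output and measures runtime, a patch cannot pass by returning a faster but different result.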

Substantial Multi-Language Changes

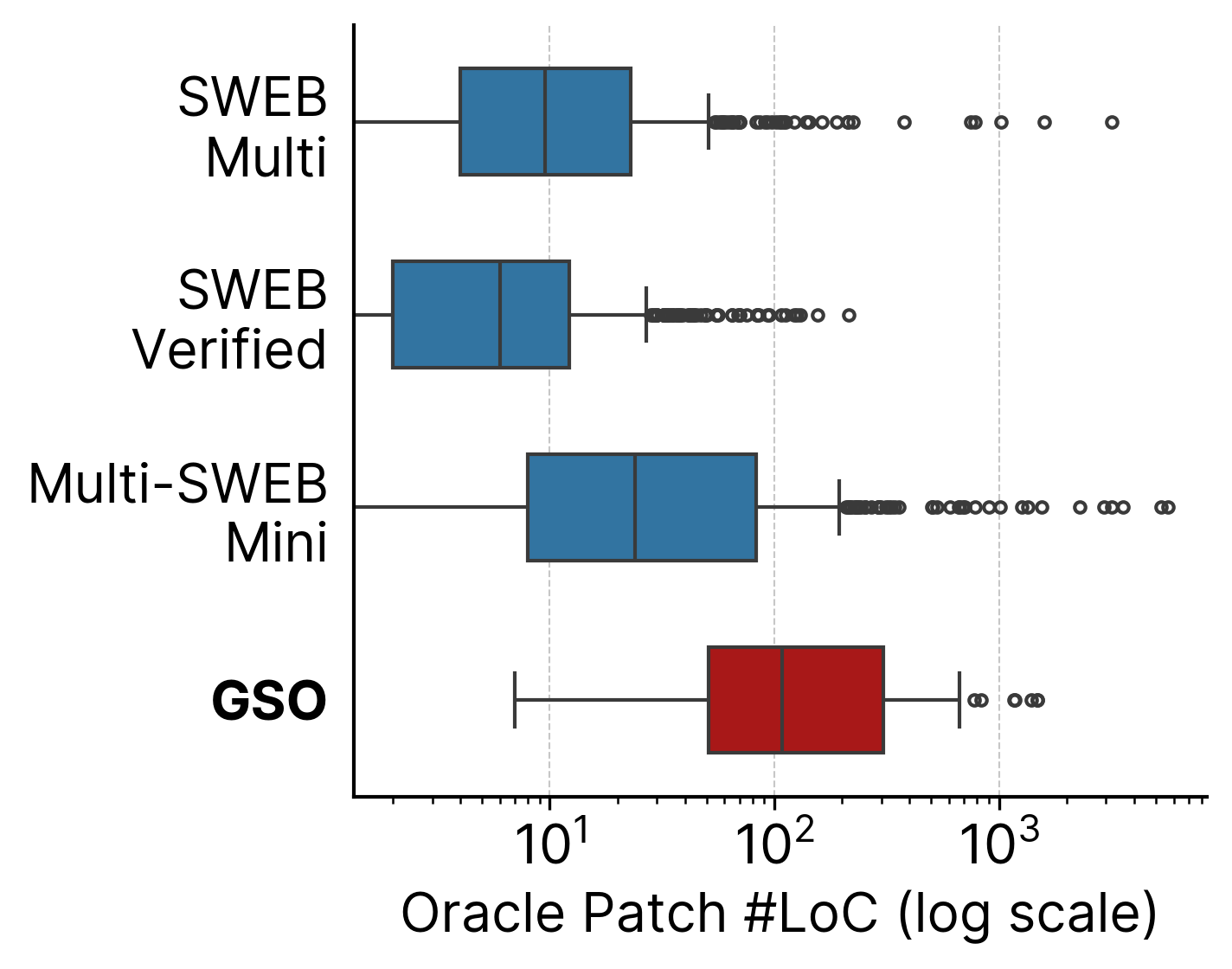

Tasks span 5 languages across 8 domains, and roughly 60% require non-Python edits. Oracle patches span multiple files and functions, demanding up to 15× more edits than existing SWE benchmarks.

Results

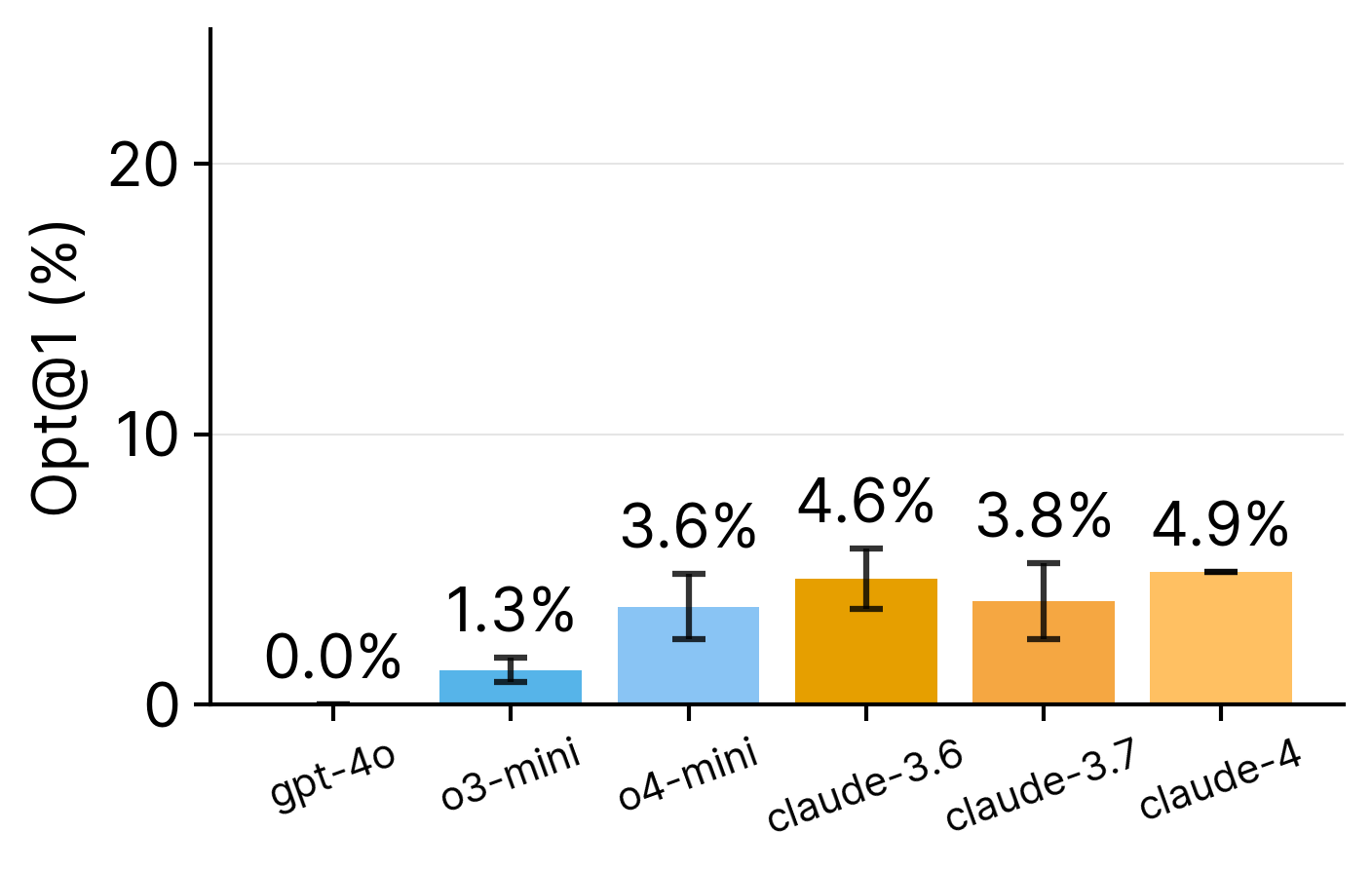

Opt@1

Our evaluation reveals that current SWE-Agents struggle significantly with software optimization tasks. Even the best-performing model, Claude-4.0, achieves a success rate below 5% on Opt@1, while GPT-4o fails completely at 0.0%.

These results demonstrate that success on existing SWE benchmarks does not transfer to more challenging real-world software tasks requiring both algorithmic reasoning and engineering expertise. The performance gap highlights the substantial challenges in bridging algorithmic coding with systems-level optimization.

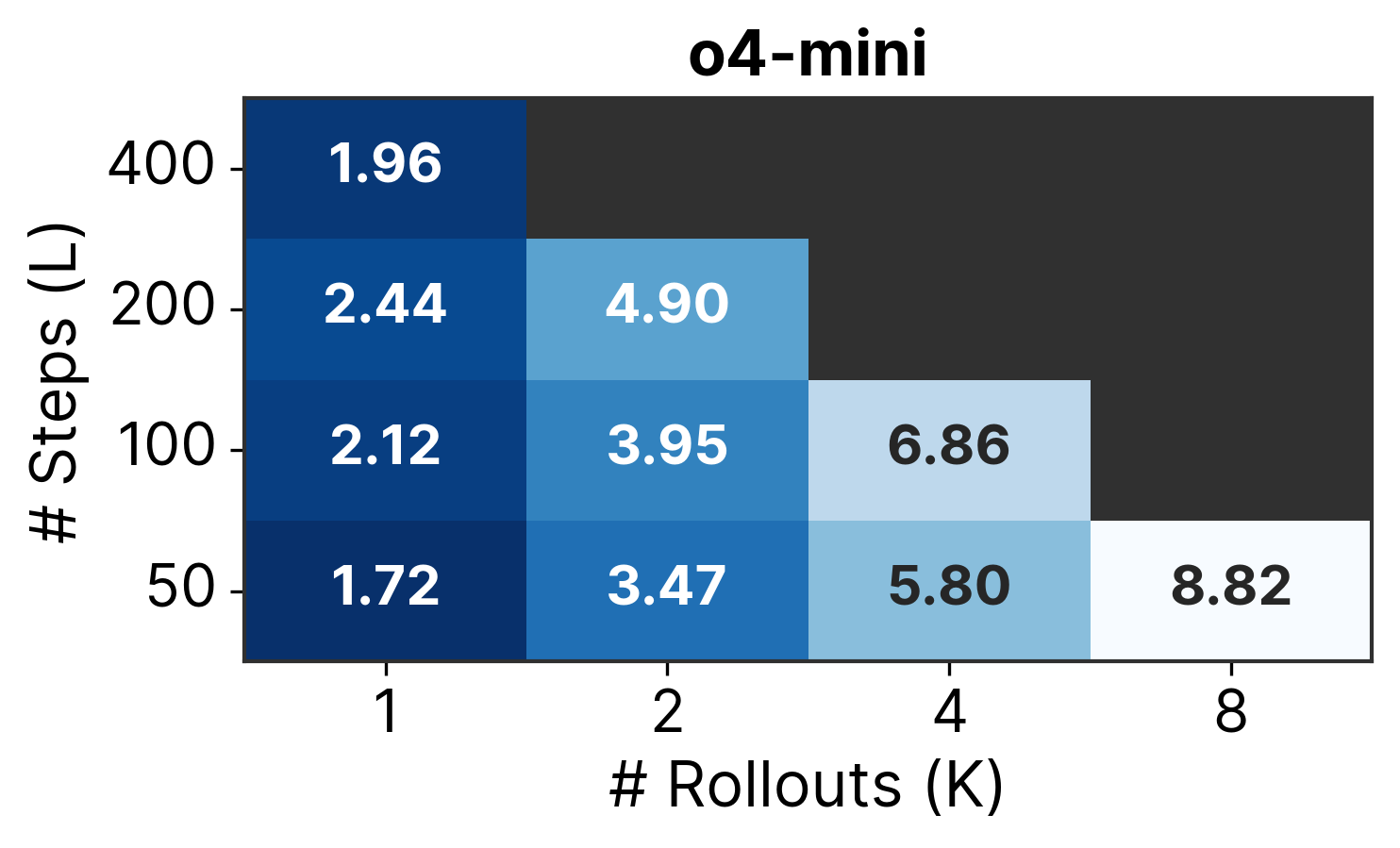

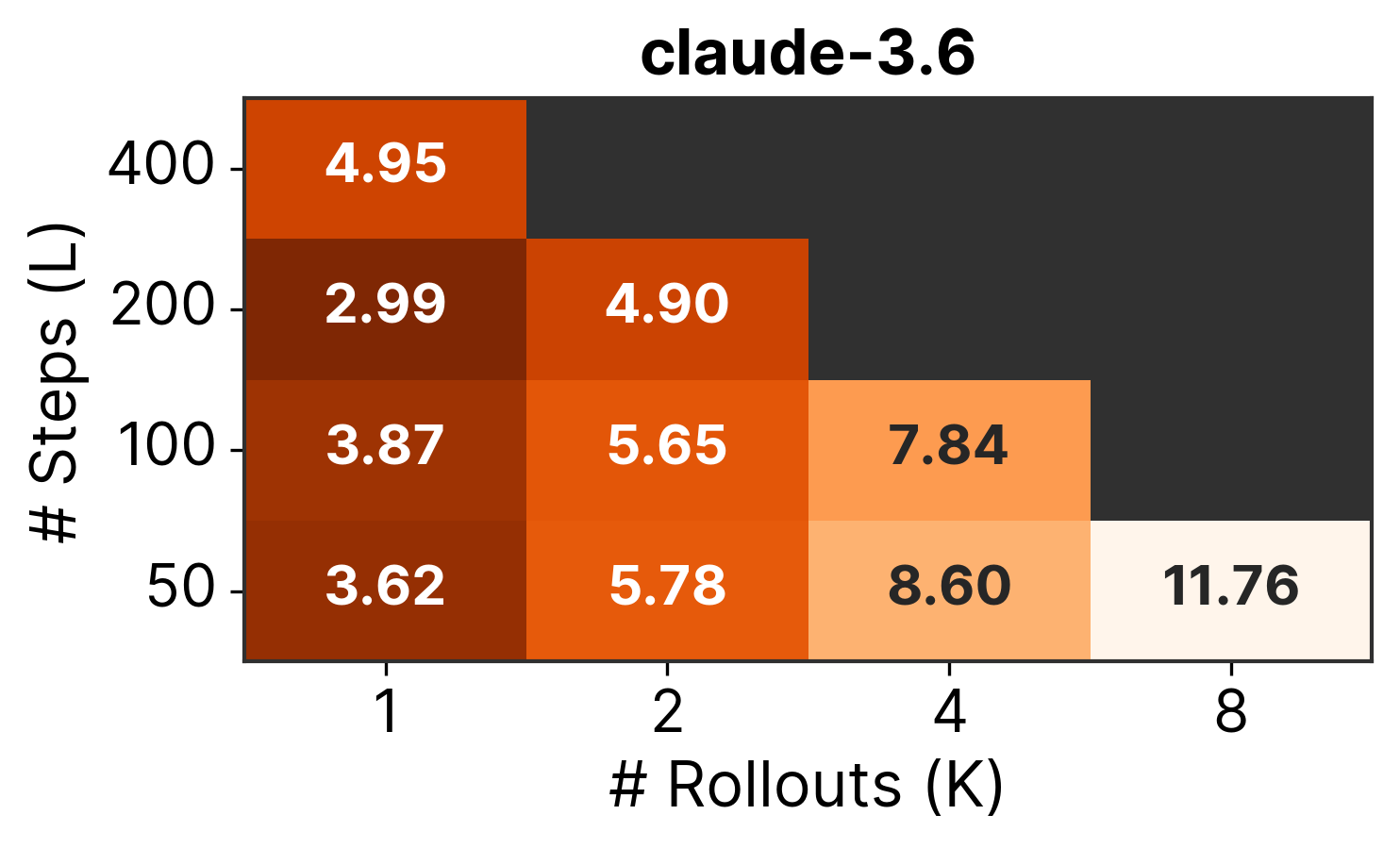

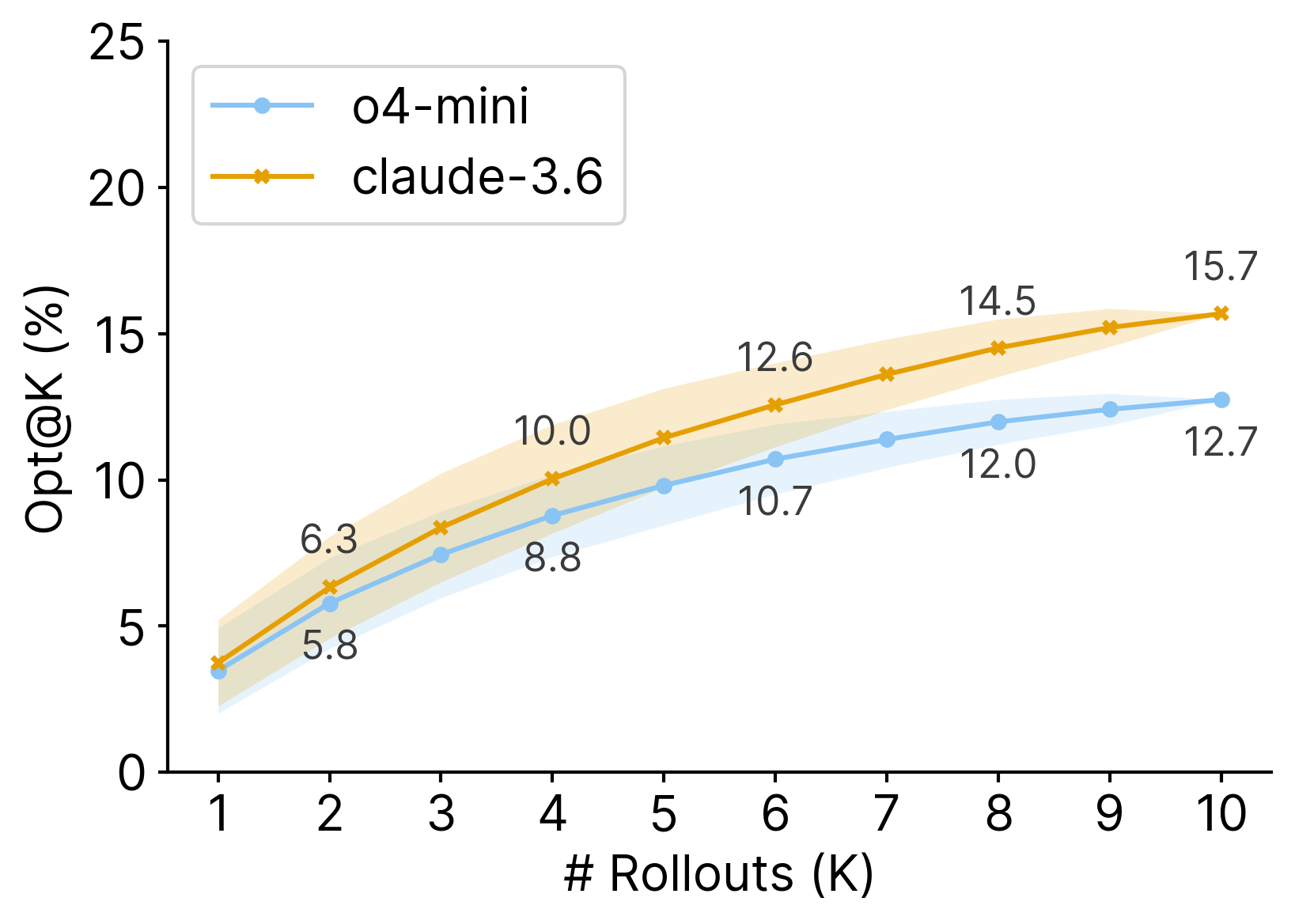

Scaling Test-Time Compute

Our experiments suggest that parallel compute (multiple rollouts) scales more efficiently than serial compute (more steps per rollout). With only 50 steps each, 8 rollouts yield higher performance than a single rollout with 400 steps. Furthermore, Opt@K improves to 15% with more compute, though with diminishing returns. Despite these improvements, Opt@10 performance remains modest (under 20%) for both models, indicating fundamental limitations in current SWE-Agents.
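One way an Opt@K-style metric could be computed from multiple rollouts is sketched below. It borrows the standard unbiased pass@k estimator, 1 - C(n-c, k) / C(n, k), as an assumption. The function names are hypothetical, and the source does not state that this is the estimator behind the reported numbers.

```python
from math import comb


def opt_at_k(n, c, k):
    """Estimate the chance that at least one of k sampled rollouts succeeds.

    n -- total rollouts collected for a task
    c -- number of those rollouts that succeeded
    k -- rollout budget being evaluated (1 <= k <= n)
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # Fewer than k failures exist, so any k rollouts include a success.
    if n - c < k:
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_opt_at_k(successes_per_task, n, k):
    """Average the per-task estimate over all tasks."""
    return sum(opt_at_k(n, c, k) for c in successes_per_task) / len(successes_per_task)
```

Under this estimator, a task with 1 success out of 4 rollouts contributes 0.5 at k = 2, which illustrates how additional parallel rollouts raise the score even when individual rollouts rarely succeed.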

Qualitative Analysis

We further classify model failures to understand how SWE-Agents fail.

Explore some examples of GSO tasks and model attempts.

Pillow TIFF Frame Counting Optimization

Task: Optimize Pillow's TIFF image handling for n_frames and is_animated properties.

Agent Approach: O4-Mini replaced inefficient frame-by-frame loading with direct binary traversal of TIFF's IFD pointers, reading only essential metadata. The optimization reduced complexity from O(n²) to O(n) by skipping tag parsing and frame decompression entirely. The change achieves a ~4.2x speedup on multi-frame TIFF processing.
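To make the traversal concrete, here is a standalone sketch of counting IFDs in a classic (non-BigTIFF) TIFF layout. It is not the agent's patch or Pillow's code. The helper names `build_ifd_chain` and `count_ifds` are hypothetical, and the synthetic byte stream holds dummy entries rather than a decodable image.

```python
import io
import struct


def build_ifd_chain(num_ifds, entries_per_ifd=1):
    """Build a little-endian TIFF header followed by a chain of dummy IFDs."""
    header_size = 8
    ifd_size = 2 + entries_per_ifd * 12 + 4  # count + entries + next pointer
    first = header_size if num_ifds else 0
    buf = bytearray(b"II" + struct.pack("<H", 42) + struct.pack("<L", first))
    for i in range(num_ifds):
        offset = header_size + i * ifd_size
        # A next-IFD offset of 0 terminates the chain.
        next_offset = offset + ifd_size if i < num_ifds - 1 else 0
        buf += struct.pack("<H", entries_per_ifd)
        buf += b"\x00" * (entries_per_ifd * 12)
        buf += struct.pack("<L", next_offset)
    return bytes(buf)


def count_ifds(fp):
    """Count IFDs by following next-IFD pointers without decoding any tags."""
    fp.seek(0)
    byte_order = fp.read(2)
    if byte_order == b"II":
        endian = "<"
    elif byte_order == b"MM":
        endian = ">"
    else:
        raise ValueError("not a TIFF byte-order mark")
    magic, offset = struct.unpack(endian + "HL", fp.read(6))
    if magic != 42:
        raise ValueError("not a classic TIFF header")
    count = 0
    while offset:
        count += 1
        fp.seek(offset)
        (num_entries,) = struct.unpack(endian + "H", fp.read(2))
        # Each entry is 12 bytes; the next-IFD offset follows the entries.
        fp.seek(offset + 2 + num_entries * 12)
        (offset,) = struct.unpack(endian + "L", fp.read(4))
    return count
```

Each IFD costs two small reads and two seeks, so the total work grows linearly with the number of frames.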

Optimized Implementation

    # Fast count IFD entries without decoding tags
    fp = self.fp
    orig_pos = fp.tell()
    endian = self.tag_v2._endian
    offset = self.__first
    count = 0
    while offset:
        count += 1
        fp.seek(offset)
        entry_count_data = fp.read(2)
        num_entries = struct.unpack(endian + "H", entry_count_data)[0]
        # Skip entries and read next IFD offset
        fp.seek(offset + 2 + num_entries * 12)
        next_offset_data = fp.read(4)
        offset = struct.unpack(endian + "L", next_offset_data)[0]
    self._n_frames = count

NumPy ufunc.at Python Override

Agent Approach: O4-Mini attempted to optimize NumPy's ufunc.at by creating a Python override in __init__.py instead of modifying the underlying C implementation.

Why it Failed: The agent avoided the required deeper C-level changes and instead tried to override with a Python function, completely missing the performance-critical ufunc layer. The human commit implemented proper C++ ufuncs with BLAS acceleration and optimized memory access patterns.

Lesson: Agents often resort to superficial Python-level patches when deep systems knowledge is required.
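For context on what `ufunc.at` computes, the sketch below compares `np.add.at` with a `np.bincount`-based formulation. It assumes NumPy is installed and only covers 1D float arrays with non-negative integer indices and array-valued `values`. The function names are hypothetical, and this is not the agent's or the developers' code.

```python
import numpy as np


def scatter_add_reference(a, indices, values):
    """Unbuffered in-place accumulation: repeated indices all contribute."""
    np.add.at(a, indices, values)
    return a


def scatter_add_bincount(a, indices, values):
    """Same result for 1D float arrays, built from a weighted histogram."""
    # bincount sums the weights that fall on each index; minlength pads the
    # result so it lines up with `a` for the in-place addition.
    a += np.bincount(indices, weights=values, minlength=len(a))
    return a


idx = np.array([0, 1, 1, 3, 1])
vals = np.array([1.0, 2.0, 3.0, 4.0, 5.0])

via_at = scatter_add_reference(np.zeros(5), idx, vals)
via_bincount = scatter_add_bincount(np.zeros(5), idx, vals)

# Plain fancy-index addition is buffered, so repeated indices do not accumulate.
buffered = np.zeros(5)
buffered[idx] += vals
```

The two scatter-add variants agree, while the buffered version loses contributions at the repeated index. A Python-level rewrite like this only covers a narrow special case, which is consistent with the lesson above.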

Agent's Failed Approach (simplified)

    # __init__.py
    # Monkey-patch ufunc.at for faster add/subtract operations
    _orig_ufunc_at = ufunc.at

    def _at_fast(self, a, indices, values=None):
        ...
        # Only optimize for 1D numpy arrays
        if name in ('add', 'subtract') and isinstance(a, np.ndarray) and a.ndim == 1:
            # Use np.bincount for optimization
            return np.bincount(indices, weights=values, minlength=len(a))
        ...
        return _orig_ufunc_at(self, a, indices, values)

    ufunc.at = _at_fast

SIMD Segmentation Fault

Agent Approach: Claude-3.5-V2 attempted to optimize Pillow's image reduction with AVX2 SIMD vectorization and OpenMP parallelization.

Why it Failed: The implementation had unsafe memory access patterns at image boundaries and inconsistent function interfaces, causing segmentation faults. The developer commit uses careful hand-crafted SIMD with proper boundary handling and data alignment.

Lesson: Low-level SIMD programming requires precise memory management that current models struggle with.

Error Output

    timeout: the monitored command dumped core
    /eval.sh: line 53: 973 Segmentation fault timeout 300s python "$test_file"

    # Agent added unsafe AVX2 vectorization:
    + Added vectorized pixel processing (8 pixels at once)
    + Added edge case handling code
    + Added function pointers for different reduction strategies
    - Removed redundant code in specialized reduction functions

Spurious Compiler Flag Optimization

Agent Approach: O4-Mini attempted to optimize Pillow's alpha compositing by simply adding -O3 and -mavx2 compiler flags to setup.py.

Why it Failed: The Pillow project already uses optimized builds by default, so this approach shows a fundamental misunderstanding of real-world project configurations. In contrast, the ground-truth commit uses hand-crafted AVX2 and SSE4 intrinsics with specialized shuffle masks and a tiered fallback approach.

Lesson: Agents often attempt trivial build-system changes instead of real algorithmic improvements.

Agent's Naive Change

      ext_modules = [
    -     Extension("PIL._imaging", files, extra_compile_args=["-msse4"]),
    +     Extension("PIL._imaging", files, extra_compile_args=["-mavx2", "-O3"]),
          Extension("PIL._imagingft", ["src/_imagingft.c"]),
          Extension("PIL._imagingcms", ["src/_imagingcms.c"]),
          Extension("PIL._webp", ["src/_webp.c"]),
      ]